Table of contents

- Basic Cloud Computing Architecture

- Why should we use cloud architecture?

- What is the operation of cloud architecture?

- Fundamental Cloud Architectures

- Workload Distribution Architecture

- Dynamic Scalability Architecture

- Architecture for Elastic Resource Capacity

- Cloud Bursting Architecture

- Redundant Storage Architecture

- Advanced Cloud Architectures

- Specialized Cloud Architectures

- Why is cloud architecture advantageous?

- What are optimal practices for cloud architecture?

"I don't need a hard disk in my computer if I can get to the server faster... carrying around these non-connected computers is byzantine by comparison." -- Steve Jobs, late chairman of Apple (1997).

The way technological elements come together to create a cloud, where resources are pooled through virtualization technology and shared over a network, is known as cloud architecture. The elements of a cloud architecture are as follows:

- A front-end platform (the client or device used to access the cloud)

- A back-end platform (servers and storage)

- A cloud-based delivery model

- A network

By combining their respective strengths, these technologies form a cloud computing architecture on which applications can run, enabling end users to take advantage of the power of cloud resources.

In light of this, let's explore cloud computing architecture.

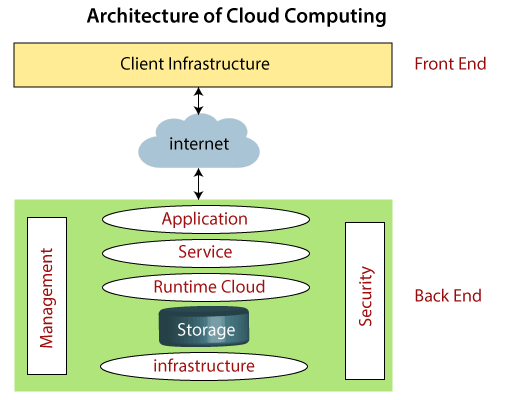

Basic Cloud Computing Architecture

We all know that both small and large businesses use cloud computing technology to store information in the cloud and provide access to it through an internet connection from any location at any time. The architecture of cloud computing combines event-driven architecture with service-oriented design.

The two sections of cloud computing architecture are as follows:

- Front end

- Back end

Front end

The client uses the front end. It includes the client-side interfaces and applications needed to access cloud computing platforms. Web browsers (such as Chrome, Firefox, and Internet Explorer), thin and fat clients, tablets, and mobile devices make up the front end.

Back End

The service provider makes use of the back end. It oversees the management of all the resources needed to offer cloud computing services. It consists of an enormous quantity of data storage, security measures, virtual machines, servers, traffic management systems, etc.

Cloud Computing Architecture's Elements

The components of the cloud computing architecture are as follows:

Client Infrastructure: A part of the front end is the client infrastructure. It offers a GUI (Graphical User Interface) for cloud communication.

Application: Any software or platform that a client wishes to access may be the application.

Service: A cloud service determines which type of service you can access according to the customer's needs. Cloud computing provides three types of services:

i. SaaS (Software as a Service): Also known as cloud application services. SaaS applications usually run directly in the web browser, eliminating the need to download and install them. Examples include Google Apps, Salesforce, Dropbox, Slack, HubSpot, and Cisco WebEx.

ii. PaaS (Platform as a Service): Also known as cloud platform services. It is somewhat similar to SaaS; the difference is that PaaS offers a platform for software development, whereas SaaS delivers finished software over the internet. Examples include OpenShift, Force.com, Magento Commerce Cloud, and Windows Azure.

iii. IaaS (Infrastructure as a Service): Also known as cloud infrastructure services. The provider supplies virtualized servers, storage, and networking, on top of which the customer manages runtime environments, middleware, and application data. Examples include Google Compute Engine (GCE), Cisco Metapod, and Amazon Web Services (AWS) EC2.

Runtime Cloud: The runtime cloud provides the execution and runtime environment for virtual machines.

Storage: Storage is one of the most crucial elements of cloud computing. It offers a large amount of capacity in the cloud for storing and managing data.

Management: In the back end, management coordinates components such as applications, services, the runtime cloud, storage, infrastructure, and security.

Security: Cloud computing comes with built-in security measures. A security mechanism is implemented on the back end.

Internet: The front end and back end interact and communicate with each other via the Internet.

Why should we use cloud architecture?

There are several reasons why businesses choose to adopt a cloud architecture. The most important are to:

- Speed up the release of new apps

- Use Kubernetes and other cloud-native architectures to modernize apps and accelerate digital transformation

- Ensure compliance with the most recent requirements

- Increase resource transparency to reduce expenses and prevent data breaches

- Enable quicker resource provisioning

- Use hybrid cloud architecture to offer real-time scalability for apps as business demands evolve

- Meet service goals consistently

- Utilize cloud reference architecture to understand IT spending trends and cloud usage

What is the operation of cloud architecture?

There are a few conventional cloud architectural models, but no two clouds are exactly the same. Public, private, hybrid, and multi-cloud architectures are among them. How do they contrast, then?

Public cloud architecture: A cloud services provider owns and operates the computing resources in a public cloud architecture. These resources are pooled and shared among multiple tenants over the Internet. Reduced operational expenses, simple scalability, and little to no maintenance are benefits of the public cloud.

Private cloud architecture: A private cloud is one that is privately owned and controlled, typically in a business's own on-site data centre. Private cloud, however, can also incorporate a number of server sites or rented space in colocation facilities spread out geographically. Private cloud architectures can be more flexible and provide stringent data security and compliance options, though they are typically more expensive than public cloud solutions.

Hybrid cloud architecture: A hybrid cloud system combines the operational efficacy of a public cloud with the data security abilities of a private cloud. Hybrid clouds let companies consolidate IT resources while facilitating workload migration between environments based on their IT and data security requirements. Hybrid clouds use both public and private cloud architectures.

Cloud architecture's foundational elements consist of:

Virtualization: The virtualization of servers, storage, and networks is the foundation of clouds. A software-based, or "virtual," version of a physical resource, including servers or storage, is referred to as a "virtualized resource." Through the utilization of this abstraction layer, various applications may share the same physical resources, boosting the effectiveness of servers, storage, and networking throughout the whole organization.

Middleware: As in conventional data centers, middleware enables networked computers, applications, and software to connect with one another through the use of databases and communications applications.

Management: Management tools make it possible to continuously monitor the performance and capacity of a cloud environment. From a single platform, IT professionals can monitor usage, roll out new apps, integrate data, and ensure disaster recovery.

Infrastructure: The cloud infrastructure is the physical hardware. It includes all of the servers, persistent storage, and networking equipment found in conventional data centers.

Fundamental Cloud Architectures

I will outline several of the most common foundational cloud architecture models, each illustrating a typical use and characteristic of modern cloud-based environments. We'll also look at how different combinations of cloud computing mechanisms fit into these architectures and why they matter.

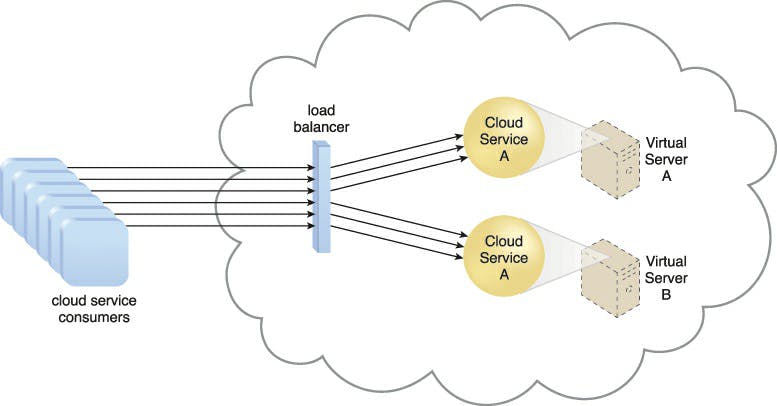

Workload Distribution Architecture

IT resources can be scaled horizontally by adding one or more identical IT resources and a load balancer whose runtime logic distributes the workload evenly among the available IT resources. The resulting workload distribution architecture reduces both over-utilization and under-utilization of IT resources, to an extent that depends on the sophistication of the load balancing algorithms and runtime logic.

Any IT resource can use this core architectural concept, with workload distribution often taking place in support of distributed virtual servers, cloud storage, and cloud services. Application of load balancing systems to particular IT resources typically results in specialized variations of this architecture that incorporate load balancing features, such as the service load balancing architecture, the load balanced virtual server architecture, and the load balanced virtual switches architecture.
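To make the idea concrete, here is a minimal sketch of two common load balancing strategies, round robin and least connections. The `Server` class, both balancer classes, and the `dispatch` helper are hypothetical names invented for this illustration; a real load balancer would also handle health checks, request completion, and failures.

```python
from dataclasses import dataclass
from itertools import cycle


@dataclass
class Server:
    """A hypothetical IT resource instance that can accept requests."""
    name: str
    active_requests: int = 0


class RoundRobinBalancer:
    """Hands requests to each server in turn, regardless of current load."""

    def __init__(self, servers):
        self._ring = cycle(servers)

    def pick(self):
        return next(self._ring)


class LeastConnectionsBalancer:
    """Hands each request to the server with the fewest active requests."""

    def __init__(self, servers):
        self._servers = list(servers)

    def pick(self):
        return min(self._servers, key=lambda s: s.active_requests)


def dispatch(balancer, request_count):
    """Send request_count requests through the balancer; return per-server totals."""
    totals = {}
    for _ in range(request_count):
        server = balancer.pick()
        server.active_requests += 1  # requests are assumed never to finish here
        totals[server.name] = totals.get(server.name, 0) + 1
    return totals
```

Round robin is the simpler of the two but ignores how busy each server already is; least connections steers new work away from a server that is already loaded, which is one way the "sophistication" of the runtime logic affects how evenly resources are used.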

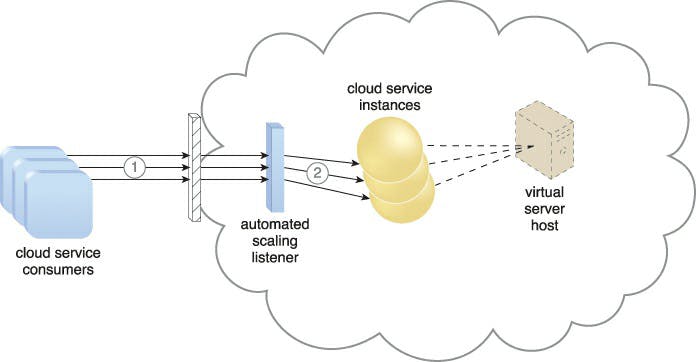

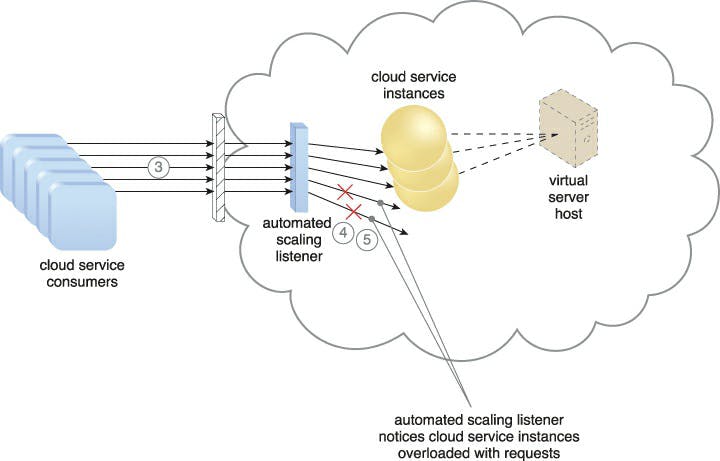

Dynamic Scalability Architecture

The dynamic scalability architecture is an architectural model based on a system of predefined scaling conditions that trigger the dynamic allocation of IT resources from resource pools. Dynamic allocation lets utilisation vary with changes in demand, since unused IT resources are reclaimed efficiently without manual intervention.

The automated scaling listener is configured with workload thresholds that determine when additional IT resources must be added to the workload processing. This mechanism can be equipped with logic that establishes how many additional IT resources can be provided dynamically, in line with the terms of a given cloud consumer's provisioning contract.

Dynamic scaling is frequently applied in the ways listed below:

• Dynamic horizontal scaling: To meet varying demands, IT resource instances are scaled out and in. According to needs and permissions, the automated scaling listener tracks requests and signals resource replication to start duplicating IT resources.

• Dynamic vertical scaling: When a single IT resource's processing capability has to be changed, instances of the IT resource are scaled up and down. For instance, a virtual server that is experiencing overload may have its memory boosted dynamically or get an additional processor core.
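The threshold logic behind dynamic horizontal scaling can be sketched in a few lines. This is only an illustration under simple assumptions: `ScalingPolicy`, `desired_instances`, and `scaling_action` are hypothetical names, and the workload is reduced to a single request count.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical scaling conditions agreed in a provisioning contract."""
    requests_per_instance: int   # workload one instance is expected to handle
    min_instances: int = 1
    max_instances: int = 10      # contract ceiling on dynamically added instances


def desired_instances(current_requests, policy):
    """Return how many instances the current workload calls for, within limits."""
    needed = math.ceil(current_requests / policy.requests_per_instance)
    return max(policy.min_instances, min(policy.max_instances, needed))


def scaling_action(running, current_requests, policy):
    """Describe the signal a scaling listener would send for this workload."""
    target = desired_instances(current_requests, policy)
    if target > running:
        return ("scale_out", target - running)
    if target < running:
        return ("scale_in", running - target)
    return ("hold", 0)
```

For example, with `ScalingPolicy(requests_per_instance=100, max_instances=5)`, two running instances and 250 requests yield `("scale_out", 1)`, while a flood of requests is capped at the five instances the contract allows.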

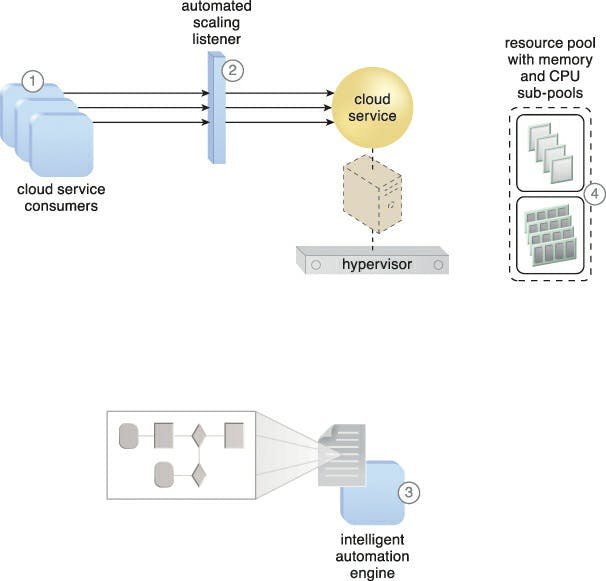

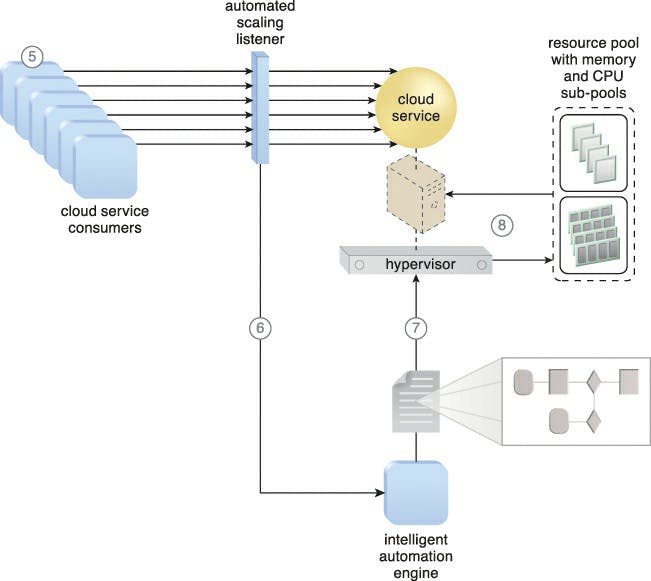

Architecture for Elastic Resource Capacity

The main function of the elastic resource capacity architecture is the dynamic provisioning of virtual servers, using a system that allocates and reclaims CPUs and RAM in response to the changing processing demands of hosted IT resources.

Scaling technology that communicates with the hypervisor and/or VIM uses resource pools to access and release CPU and RAM resources as needed. In order to dynamically allocate more processing power from the resource pool before capacity thresholds are reached, the runtime processing of the virtual server is monitored. Vertical scaling is then applied to the virtual server, the applications it hosts, and the IT resources as a result.

By using the VIM rather than the hypervisor directly, the intelligent automation engine script may request scalability in this kind of cloud architecture. Rebooting virtual servers that take part in elastic resource allocation systems may be necessary in order for the dynamic resource allocation to take effect.
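A rough sketch of this allocate-and-release cycle follows. The `ResourcePool` and `VirtualServer` classes, the 80% threshold, and the fixed step sizes (one CPU, 2 GB of RAM) are all assumptions made for the example, not values from any particular hypervisor or VIM.

```python
from dataclasses import dataclass


@dataclass
class ResourcePool:
    """Hypothetical pool of spare CPU cores and RAM managed by the VIM."""
    free_cpus: int
    free_ram_gb: int


@dataclass
class VirtualServer:
    name: str
    cpus: int
    ram_gb: int


def scale_up(server, pool, cpu_load, ram_load, threshold=0.8):
    """Grant one CPU and/or 2 GB RAM when usage crosses the threshold.

    cpu_load and ram_load are utilisation fractions between 0 and 1.
    Returns True if any resource was allocated from the pool.
    """
    changed = False
    if cpu_load >= threshold and pool.free_cpus >= 1:
        pool.free_cpus -= 1
        server.cpus += 1
        changed = True
    if ram_load >= threshold and pool.free_ram_gb >= 2:
        pool.free_ram_gb -= 2
        server.ram_gb += 2
        changed = True
    return changed


def release(server, pool, cpus=0, ram_gb=0):
    """Return resources to the pool once demand subsides."""
    # Never shrink the server below 1 CPU and 1 GB of RAM.
    cpus = min(cpus, server.cpus - 1)
    ram_gb = min(ram_gb, server.ram_gb - 1)
    server.cpus -= cpus
    server.ram_gb -= ram_gb
    pool.free_cpus += cpus
    pool.free_ram_gb += ram_gb
```

Acting at 80% rather than 100% reflects the point made above: capacity should be added before the threshold is actually hit.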

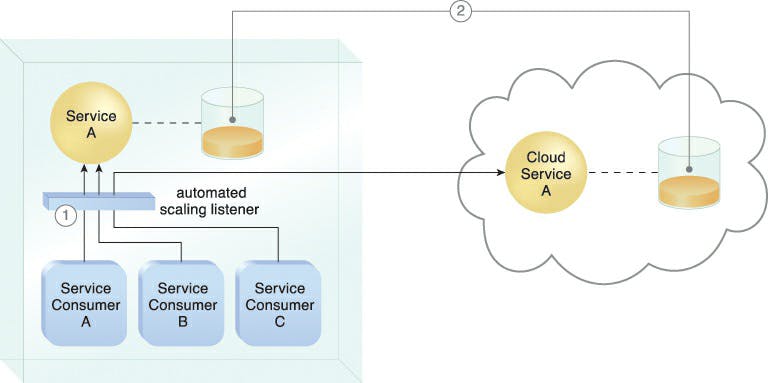

Cloud Bursting Architecture

When predefined capacity thresholds are reached, the cloud bursting architecture establishes a form of dynamic scaling that scales or "bursts out" on-premise IT resources into the cloud. The corresponding cloud-based IT resources are redundantly pre-deployed but remain inactive until cloud bursting occurs. Once they are no longer needed, the cloud-based IT resources are released and the architecture "bursts in" back to the on-premise environment.

Cloud bursting is a flexible scaling architecture that gives cloud consumers the option of using cloud-based IT resources only to meet peak usage demands. The foundation of this architectural model is built on the automated scaling listener and resource replication mechanisms.
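The routing decision at the heart of cloud bursting is simple enough to show directly. The function below is a hypothetical sketch that treats capacity as a plain request count; `route_requests` and its parameters are names made up for this example.

```python
def route_requests(incoming, on_premise_capacity):
    """Split a batch of requests between on-premise and cloud resources.

    Requests stay on-premise until the capacity threshold is reached;
    only the overflow "bursts out" to the pre-deployed cloud resources.
    """
    on_premise = min(incoming, on_premise_capacity)
    cloud = incoming - on_premise
    return {"on_premise": on_premise, "cloud": cloud, "bursting": cloud > 0}
```

With an on-premise capacity of 100, a batch of 80 requests stays entirely on-premise, while a batch of 130 sends 30 requests to the cloud. When demand drops back under the threshold, the `cloud` share returns to zero, which corresponds to "bursting in".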

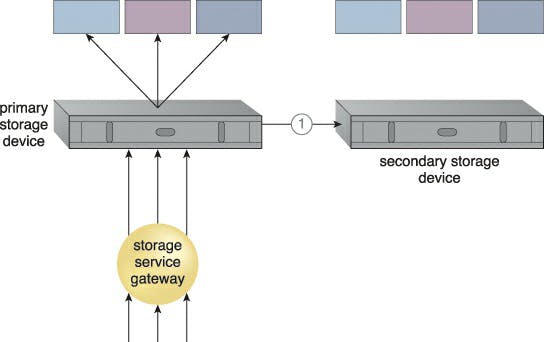

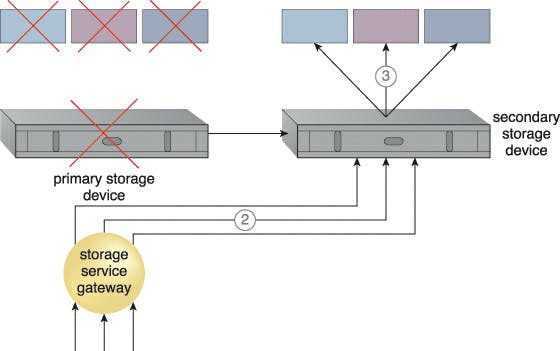

Redundant Storage Architecture

Cloud storage devices are occasionally subject to failure and disruption caused by network connectivity issues, controller or general hardware failure, or security breaches. When a cloud storage device's reliability is compromised, the effect can ripple through every service, application, and infrastructure component in the cloud that depends on its availability.

The redundant storage architecture introduces a secondary duplicate cloud storage device as part of a failover system that synchronises its data with the data in the primary cloud storage device. When the primary device fails, a storage service gateway redirects cloud consumer requests to the secondary device.

A logical unit number (LUN) is a logical drive that represents a partition of a physical drive.

Usually for financial reasons, cloud providers may place secondary cloud storage devices in a different geographic area than the primary cloud storage unit. However, for particular data types, this could raise legal issues. Given that some replication transport protocols have distance constraints, the location of the secondary cloud storage devices may determine the protocol and synchronisation mechanism to be employed.
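The failover behaviour described in this section can be sketched with two in-memory devices and a gateway in front of them. `StorageDevice` and `StorageServiceGateway` are hypothetical classes written for this illustration, and the sketch assumes synchronous replication (every write goes to both devices), which is only one of several possible synchronisation mechanisms.

```python
class StorageDevice:
    """Hypothetical in-memory stand-in for a cloud storage device."""

    def __init__(self, name):
        self.name = name
        self.data = {}
        self.available = True

    def write(self, key, value):
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        self.data[key] = value

    def read(self, key):
        if not self.available:
            raise ConnectionError(f"{self.name} is unavailable")
        return self.data[key]


class StorageServiceGateway:
    """Routes requests to the primary device and fails over to the secondary."""

    def __init__(self, primary, secondary):
        self.primary = primary
        self.secondary = secondary

    def write(self, key, value):
        # Synchronous replication: every write goes to both devices.
        self.primary.write(key, value)
        self.secondary.write(key, value)

    def read(self, key):
        try:
            return self.primary.read(key)
        except ConnectionError:
            # Primary failed: redirect the request to the secondary device.
            return self.secondary.read(key)
```

For simplicity the sketch only fails over on reads; a fuller version would also decide what to do with writes while the primary is down and how to resynchronise it afterwards.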

Although there are many different architectures, it would be arduous and uninteresting for new students to learn about them all. Therefore, I'll only add two of them in each category.

Advanced Cloud Architectures

The cloud technology architectures explored in this section represent distinct and sophisticated architectural layers, several of which can be built upon the more foundational environments established by the architectural models covered above.

Hypervisor Clustering Architecture

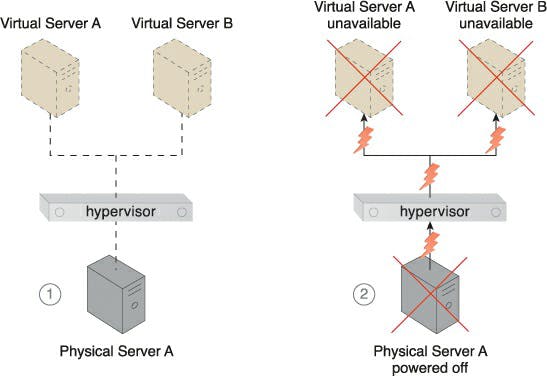

Hypervisors can create and host multiple virtual servers. Because of this dependency, any failure condition that affects a hypervisor can cascade to the virtual servers it hosts.

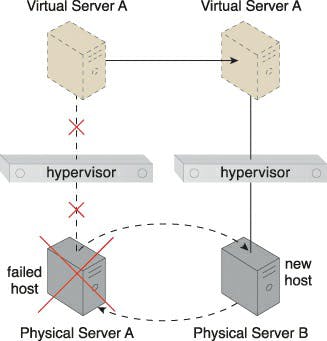

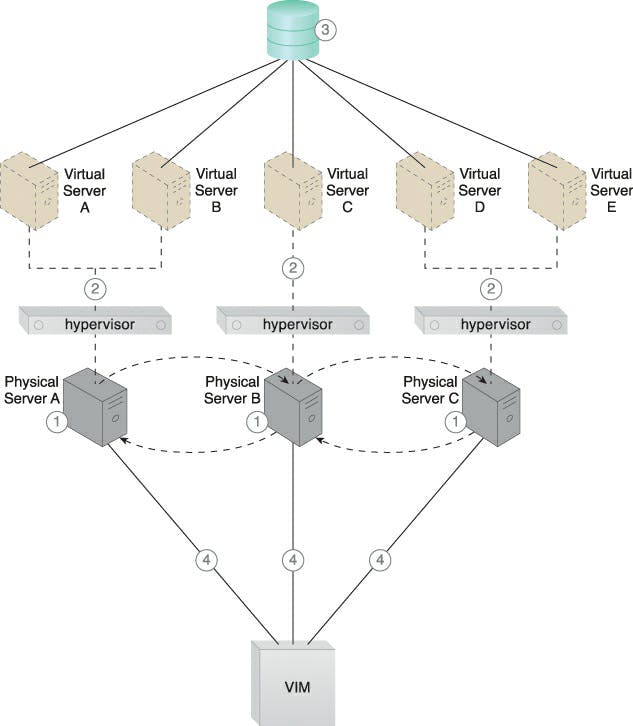

A high-availability cluster of hypervisors is created across several physical servers using the hypervisor clustering architecture. The hosted virtual servers can be relocated to another physical server or hypervisor to preserve runtime operations if a certain hypervisor or the physical server it is based on becomes unavailable.

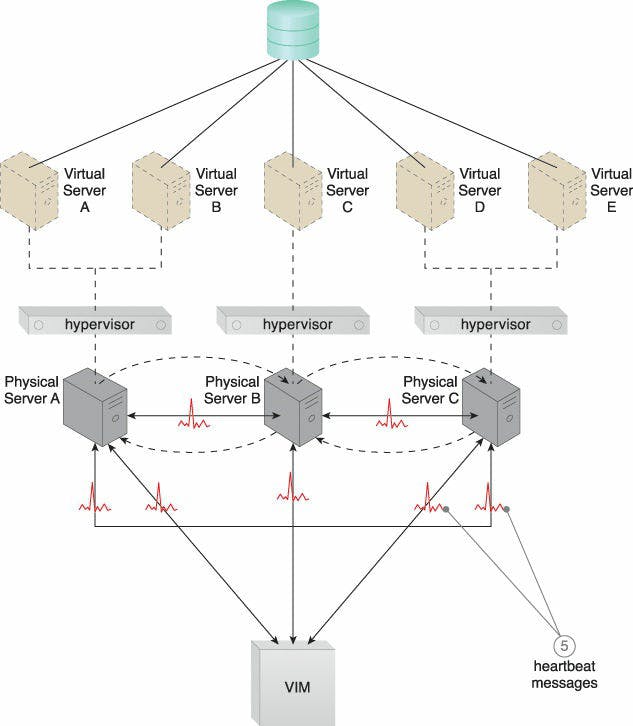

The central VIM, which manages the hypervisor cluster, periodically sends heartbeat messages to the hypervisors to confirm that they are up and running. When heartbeat messages go unacknowledged, the VIM launches the live VM migration program to dynamically move the affected virtual servers to a new host.

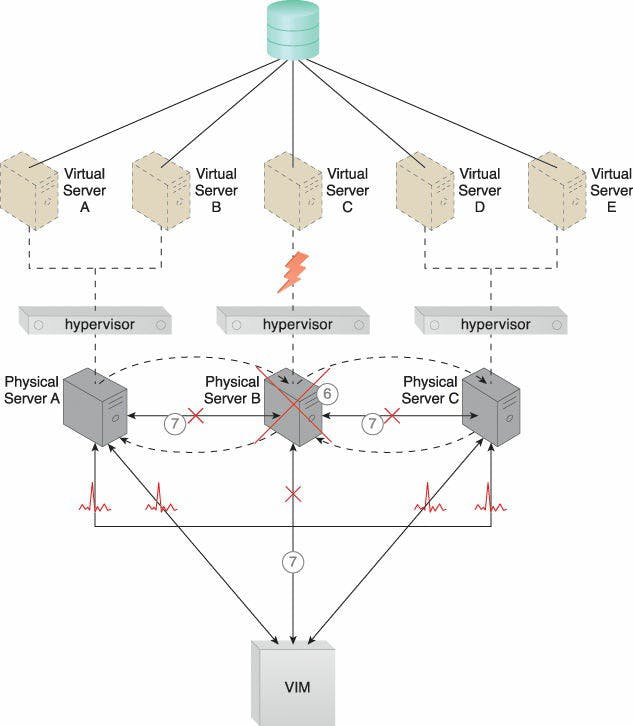

Caption: A, B, and C are the physical servers that have hypervisors installed (1). The hypervisors build virtual servers (2). It is possible for all hypervisors to access a shared cloud storage device that houses virtual server configuration data (3). Through a central VIM, the three physical server hosts have the hypervisor cluster activated (4).

Caption: The physical servers exchange heartbeat messages with one another and the VIM according to a pre-defined schedule (5).

Caption: Virtual Server C is put in danger when Physical Server B malfunctions and goes down (6). Physical Server B stops sending heartbeat messages to the VIM and the other physical servers (7).

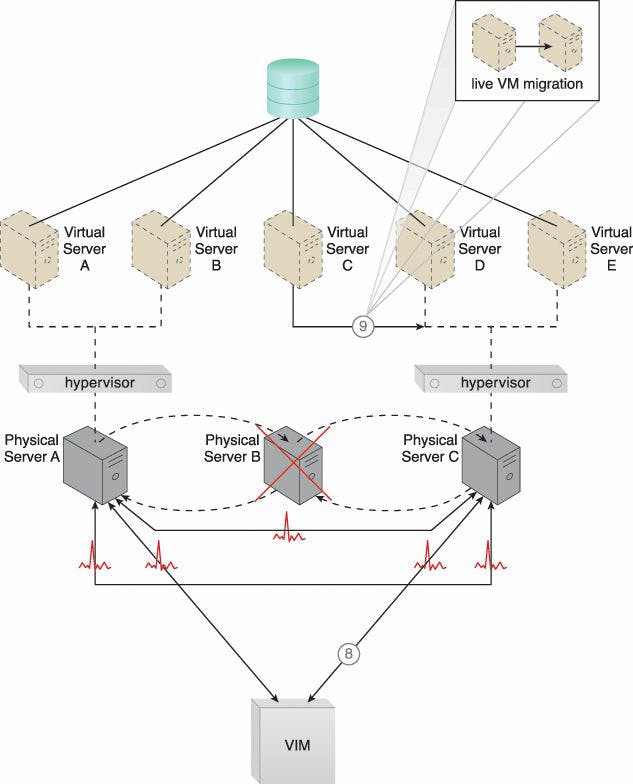

Caption: The VIM chooses Physical Server C as the new host to take ownership of Virtual Server C after assessing the available capacity of other hypervisors in the cluster (8). Virtual Server C is live-migrated to the hypervisor running on Physical Server C, where restarting may be necessary before normal operations can be resumed (9).
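The sequence in these captions (missed heartbeats, capacity check, migration) can be sketched as follows. The `Host` class, the timeout value, and the rule of picking the healthy host with the most free capacity are assumptions for the example; the captions only say that the VIM assesses available capacity before choosing a host.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical physical server running a clustered hypervisor."""
    name: str
    free_capacity: int                 # spare capacity units for new VMs
    last_heartbeat: float = 0.0        # timestamp of the last heartbeat seen
    vms: list = field(default_factory=list)


def failed_hosts(hosts, now, timeout):
    """Hosts whose last heartbeat is older than the timeout."""
    return [h for h in hosts if now - h.last_heartbeat > timeout]


def migrate_vms(hosts, now, timeout):
    """Move VMs from failed hosts to the healthy host with most free capacity.

    Returns a list of (vm, source, destination) tuples. Each VM is assumed
    to consume one capacity unit; VMs stay put if no healthy host has room.
    """
    down = failed_hosts(hosts, now, timeout)
    down_names = {h.name for h in down}
    healthy = [h for h in hosts if h.name not in down_names]
    moves = []
    for source in down:
        for vm in list(source.vms):
            candidates = [h for h in healthy if h.free_capacity > 0]
            if not candidates:
                break
            target = max(candidates, key=lambda h: h.free_capacity)
            source.vms.remove(vm)
            target.vms.append(vm)
            target.free_capacity -= 1
            moves.append((vm, source.name, target.name))
    return moves
```

If Physical Server B has been silent for longer than the timeout and Physical Server C has the most spare capacity, the virtual server on B ends up on C, mirroring steps (6) to (9) above.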

Non-Disruptive Service Relocation Architecture

Several factors might cause a cloud service to go offline, including: runtime consumption demands that exceed its processing capability; maintenance updates that need a brief outage; and permanent relocation to a new physical server host.

If a cloud service becomes unavailable, consumer requests are usually rejected, which can lead to exception conditions. Making the cloud service temporarily inaccessible to cloud consumers is not desirable even when the outage is planned.

The non-disruptive service relocation architecture creates a framework wherein a preset event initiates the replication or transfer of a cloud service implementation during runtime, preventing any disturbance.

Instead of scaling cloud services in or out with redundant implementations, cloud service activity can be temporarily diverted to another hosting environment at runtime by placing a duplicate implementation on a different host. Similarly, when the primary implementation needs a maintenance outage, consumer requests can be temporarily redirected to a duplicate implementation. The relocation of the cloud service implementation and any cloud service activity can also be permanent, to accommodate cloud service migrations to new physical server hosts.

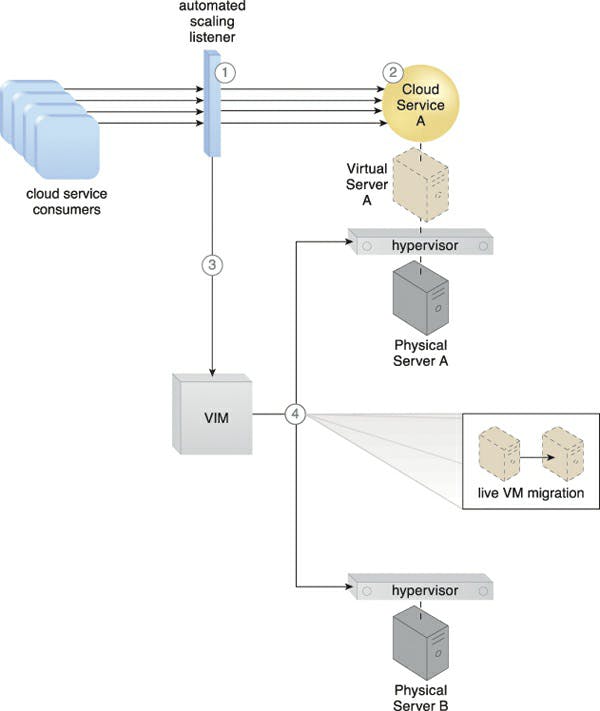

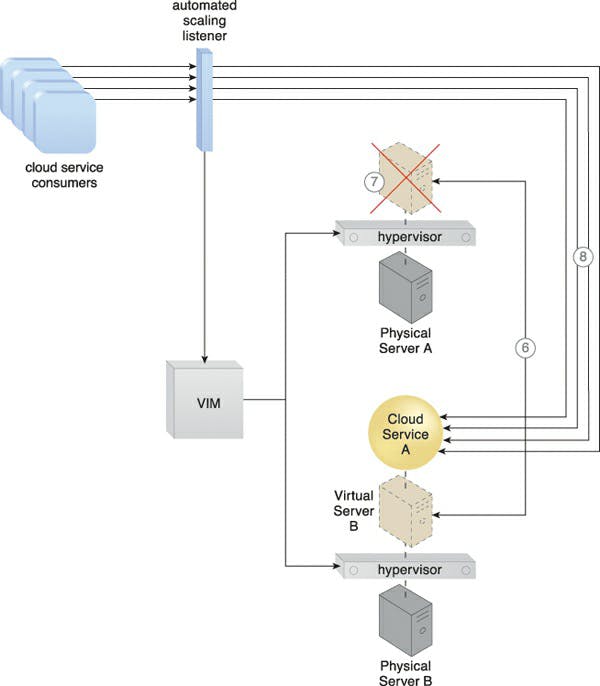

Caption: The workload for a cloud service is monitored by the automated scaling listener (1). As the workload grows (2), the cloud service's predefined threshold is reached, causing the automated scaling listener to signal the VIM to begin relocation (3). The VIM instructs the source and destination hypervisors to perform runtime relocation using the live VM migration program (4).

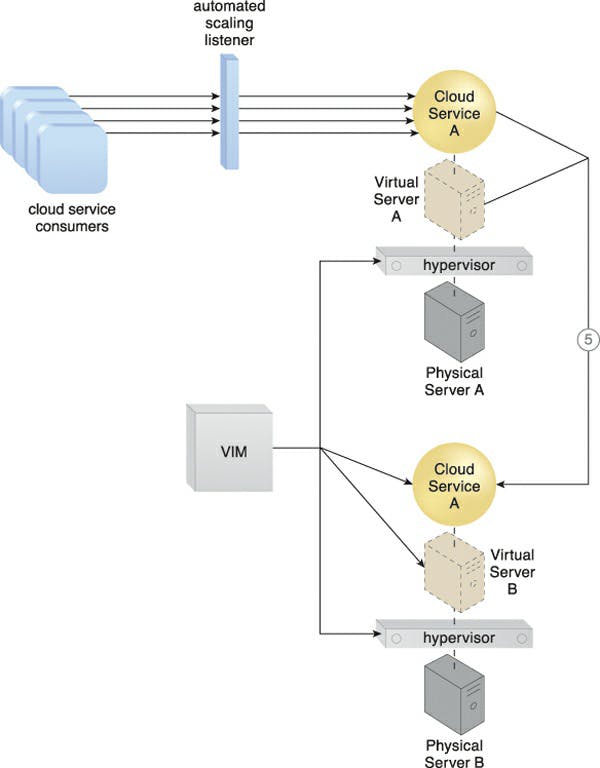

Caption: A second copy of the virtual server and its hosted cloud service are created via the destination hypervisor on Physical Server B (5).

Caption: The states of both virtual server instances are synchronised (6). Once consumer requests are confirmed to be successfully exchanged with the cloud service on Physical Server B, the first virtual server instance is removed from Physical Server A (7). Consumer requests are now sent only to the cloud service on Physical Server B (8).

Depending on the location of the virtual server's disks and configuration, one of the following two methods for virtual server relocation may be used:

• If the virtual server disks are stored on a local storage device or on a non-shared remote storage device attached to the source host, a copy of the virtual server disks is created on the destination host. After the copy has been made, both virtual server instances are synchronised, and the virtual server files are removed from the origin host.

• If the virtual server's files are stored on a remote storage device that is shared between the origin and destination hosts, copying the virtual server disks is not necessary.
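Putting the captions and the two relocation methods together, the order of operations might look like the sketch below. `relocation_steps` is a hypothetical helper that only returns a plan as text; the wording of each step is my own summary, not the output of any real migration tool.

```python
def relocation_steps(disks_on_shared_storage):
    """Return the ordered steps for relocating a virtual server at runtime."""
    steps = []
    if not disks_on_shared_storage:
        # Local or non-shared storage: the disks must exist on the destination first.
        steps.append("copy virtual server disks to destination host")
    steps += [
        "create virtual server instance on destination hypervisor",
        "synchronise state between both instances",
        "redirect consumer requests to destination",
        "remove virtual server from origin host",
    ]
    return steps
```

The only difference between the two methods is the extra disk copy at the start; the rest of the sequence is the same either way.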

Specialized Cloud Architectures

As before, there are many specialized architectures, and it would be tedious for newcomers to work through them all, so I'll cover only two here.

Direct I/O Access Architecture

Hosted virtual servers usually access the physical I/O cards installed on a physical server through a hypervisor-based processing layer called I/O virtualization. Virtual servers, however, sometimes need to connect to and use I/O cards directly, without any hypervisor interaction or emulation.

With the direct I/O access architecture, virtual servers can bypass the hypervisor and connect directly to the physical server's I/O card instead of emulating a connection through the hypervisor.

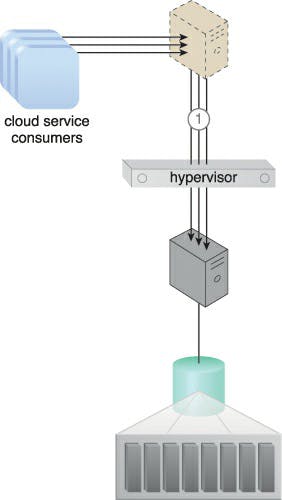

Caption: Cloud service consumers connect to a virtual server, which accesses a database on a SAN storage LUN (1). The virtual server connects to the database through a virtual switch.

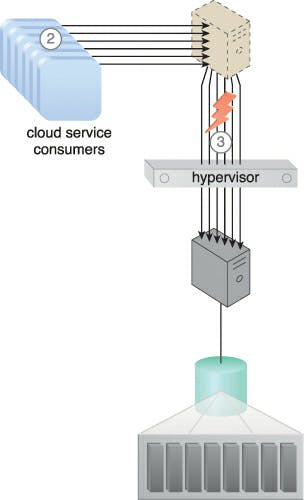

Caption: The number of cloud service consumer requests increases (2), causing the bandwidth and performance of the virtual switch to become inadequate (3).

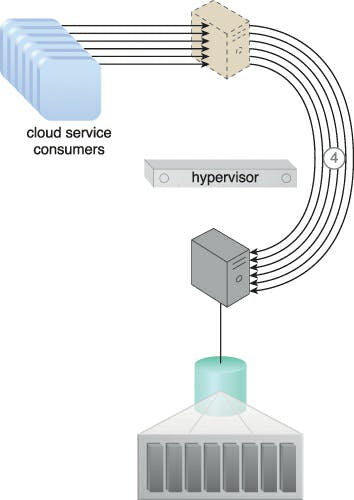

Caption: The virtual server bypasses the hypervisor to connect to the database server via a direct physical link to the physical server (4). The increased workload can now be properly handled.

To implement this approach and access the physical I/O card without hypervisor interaction, the host CPU must support this type of access and the appropriate drivers must be installed on the virtual server. Once the drivers are installed, the virtual server can recognise the I/O card as a hardware device.

Dynamic Data Normalization Architecture

In cloud-based environments, redundant data can lead to a number of problems, including: increased time needed to store and catalogue files; increased need for storage and backup space; increased costs due to increased data volume; increased time needed for replication to secondary storage; and increased time needed for data backup.

As an illustration, if a cloud consumer copies the same 100 MB of data onto a cloud storage device ten times, the consequences can be considerable:

• The cloud consumer is charged for 10 x 100 MB of storage space, even though only 100 MB of unique data was actually stored.

• The cloud provider must supply an unnecessary 900 MB of capacity in the online cloud storage device and in any backup storage systems.

• Significantly more time is needed to store and catalogue the data.

• When the cloud provider performs a site recovery, 1,000 MB of data must be replicated rather than just 100 MB, which increases replication time and degrades performance.

By identifying and removing duplicate data from cloud storage devices, the dynamic data normalisation architecture creates a de-duplication mechanism that protects cloud users from unintentionally keeping multiple copies of data. Both block- and file-based storage devices can use this technology, although the former is where it performs best. Each block that is received is examined by this de-duplication algorithm to see if it duplicates a block that has previously been received. Pointers to the corresponding blocks that are already in storage are used to replace redundant blocks.

Caption: Redundant data sets are unnecessarily filling up storage (left). The data is normalised by the data deduplication technology so that only unique data is saved (right).

The de-duplication system examines data before passing it to the storage controllers. As part of this examination, every piece of data that is processed and stored is assigned a hash code, and an index of hashes and pieces is maintained. To determine whether a newly received block of data is new or a duplicate, its hash is compared with the hashes already in storage. New blocks are saved, while duplicate data is discarded and replaced by a pointer to the original data block.
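The hash-and-pointer scheme just described can be sketched with Python's standard `hashlib` module. `DedupStore` is a hypothetical class for this illustration, and fixed-size blocks with SHA-256 hashes are my assumptions; the text does not say which block size or hash function a real system would use.

```python
import hashlib


class DedupStore:
    """Hypothetical block store that keeps one copy of each unique block."""

    def __init__(self, block_size=4096):
        self.block_size = block_size
        self.blocks = {}   # hash -> block bytes (the unique data actually stored)
        self.files = {}    # file name -> list of block hashes (the pointers)

    def put(self, name, data):
        """Split data into fixed-size blocks and store only unseen blocks."""
        pointers = []
        for start in range(0, len(data), self.block_size):
            block = data[start:start + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            # New block: save it. Duplicate: keep only the pointer.
            self.blocks.setdefault(digest, block)
            pointers.append(digest)
        self.files[name] = pointers

    def get(self, name):
        """Rebuild a file by following its pointers."""
        return b"".join(self.blocks[d] for d in self.files[name])

    def stored_bytes(self):
        """Total size of the unique blocks held in storage."""
        return sum(len(b) for b in self.blocks.values())
```

Storing the same payload ten times under ten different names leaves `stored_bytes()` equal to the size of a single copy, which is the 100 MB versus 1,000 MB saving from the earlier example in miniature.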

Why is cloud architecture advantageous?

Organizations may lessen or do away with their dependency on on-premises server, storage, and networking infrastructure thanks to cloud computing design. When an organization adopts cloud architecture, it frequently moves IT resources to the public cloud, doing away with the need for on-premises servers, storage, and power and cooling requirements for IT data centers in favor of a monthly IT expense.

A significant factor in the current popularity of cloud computing is this shift from capital expenditure to operating expense. Organizations typically move to the cloud through one of three main service models, each with its own advantages and key characteristics.

Software as a Service (SaaS): Providers host and manage applications and deliver them to organizations over the Internet, so end users do not need to install the software locally. SaaS apps are usually accessed through a web interface that works across a wide range of hardware and operating systems.

Platform as a Service (PaaS): The service provider offers a computing platform and middleware as a service, and businesses build their application or service on top of that platform. The cloud service provider supplies the networks, servers, and storage needed to run an application, while the end user manages software deployment and configuration settings.

Infrastructure as a Service (IaaS): In this most basic form of the cloud, a third-party provider supplies the required infrastructure so that businesses don't have to buy servers, networks, or storage devices. Organizations, in turn, manage their own software and applications and pay only for the capacity they actually use.

What are optimal practices for cloud architecture?

More than just a technical need, a well-designed cloud framework is a means of achieving lower operational costs, high-performing applications, and contented consumers. Organizations can guarantee they get actual business value from their cloud expenditures and future-proof their IT infrastructure by adhering to cloud architectural principles and best practices.

- Plan ahead: Make sure capacity requirements are understood when designing a cloud architecture. As the architecture takes shape, test performance continuously to prevent unexpected problems in production.

- Security first: Protect clouds from hackers and unauthorized users by securing every layer of the cloud infrastructure with data encryption, patch management, and strict policies. For the highest levels of security across a hybrid, multi-cloud enterprise, consider zero-trust security models.

- Increase performance: Use and manage the right computing resources by regularly assessing business and technical requirements.

- Reduce costs: Use managed service providers, automated processes, and utilisation tracking to cut spending on unused cloud resources.

Folks, that's all for today! I hope you enjoyed the article. Happy hunting!